The Tensor Product, Demystified

Previously on the blog, we've discussed a recurring theme throughout mathematics: making new things from old things. Mathematicians do this all the time:

- When you have two integers, you can find their greatest common divisor or least common multiple.

- When you have some sets, you can form their Cartesian product or their union.

- When you have two groups, you can construct their direct sum or their free product.

- When you have a topological space, you can look for a subspace or a quotient space.

- When you have some vector spaces, you can ask for their direct sum or their intersection.

- The list goes on!

Today, I'd like to focus on a particular way to build a new vector space from old vector spaces: the tensor product. This construction often comes across as scary and mysterious, but I hope to shine a little light and dispel some of the fear. In particular, we won't talk about axioms, universal properties, or commuting diagrams. Instead, we'll take an elementary, concrete look:

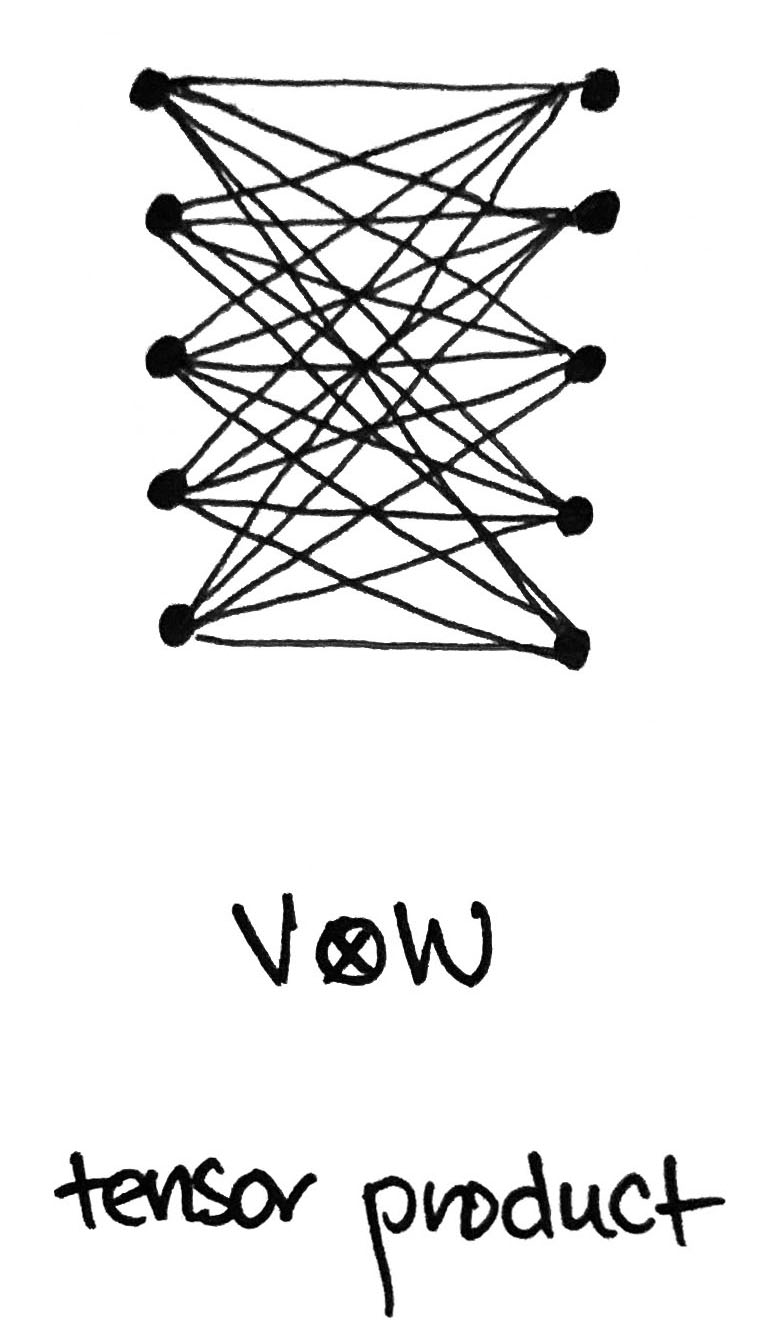

Given two vectors $\mathbf{v}$ and $\mathbf{w}$, we can build a new vector, called the tensor product $\mathbf{v} \otimes \mathbf{w}$. But what is that vector, really? Likewise, given two vector spaces $V$ and $W$, we can build a new vector space, also called their tensor product $V \otimes W$. But what is that vector space, really?

Making new vectors from old

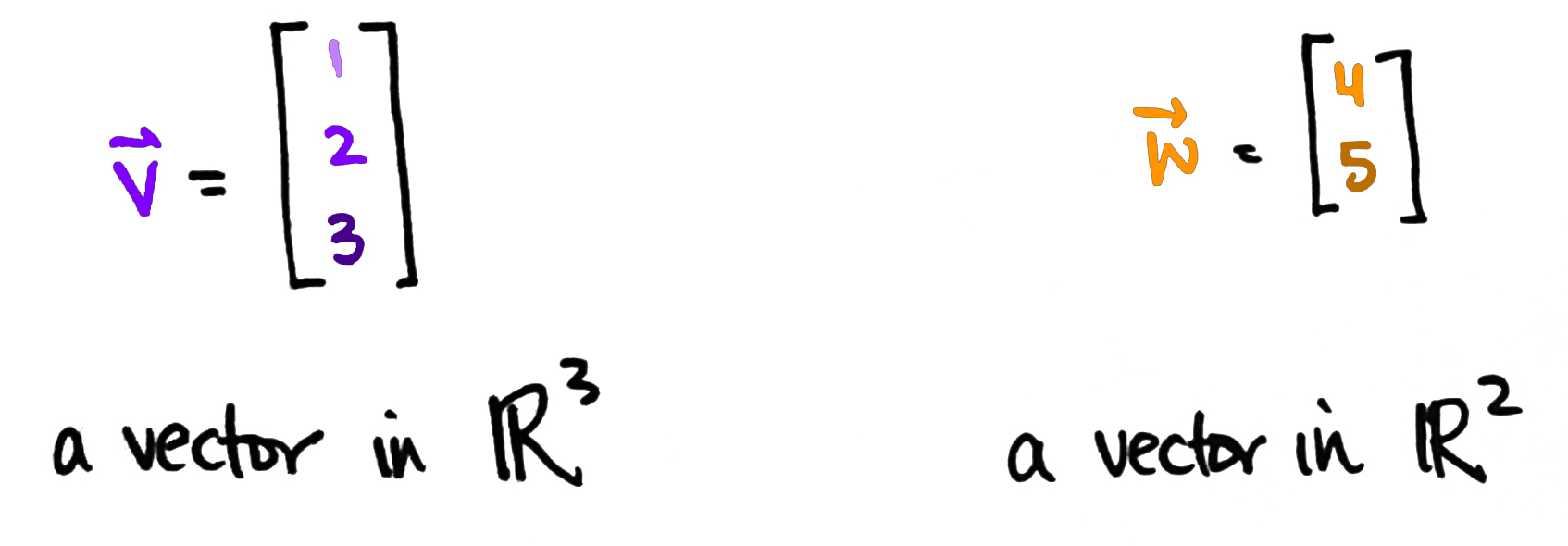

In this discussion, we'll assume $V$ and $W$ are finite dimensional vector spaces. That means we can think of $V$ as $\mathbb{R}^n$ and $W$ as $\mathbb{R}^m$ for some positive integers $n$ and $m$. So a vector $\mathbf{v}$ in $\mathbb{R}^n$ is really just a list of $n$ numbers, while a vector $\mathbf{w}$ in $\mathbb{R}^m$ is just a list of $m$ numbers.

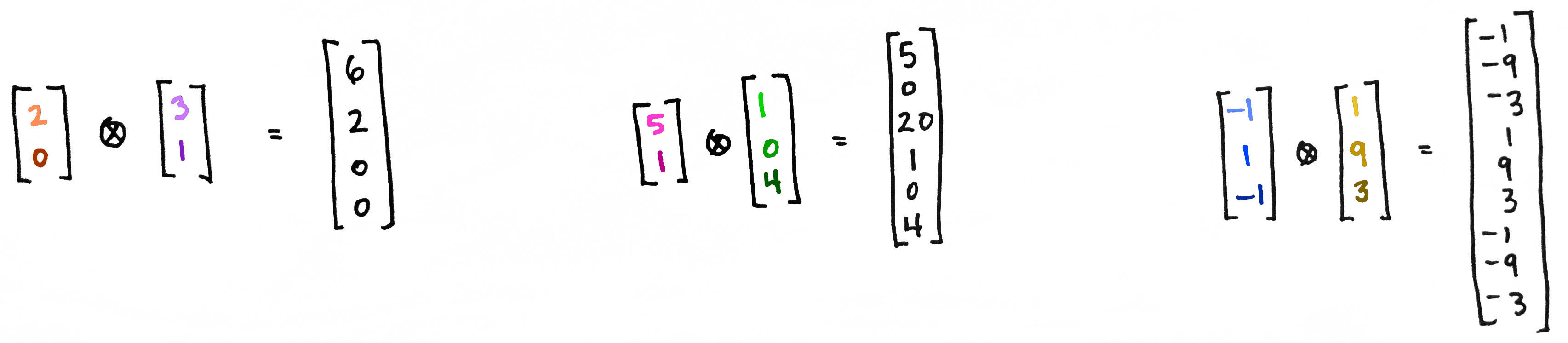

Let's try to make a new, third vector out of $\mathbf{v}$ and $\mathbf{w}$. But how? Here are two ideas: We can stack them on top of each other, or we can first multiply the numbers together and then stack them on top of each other.

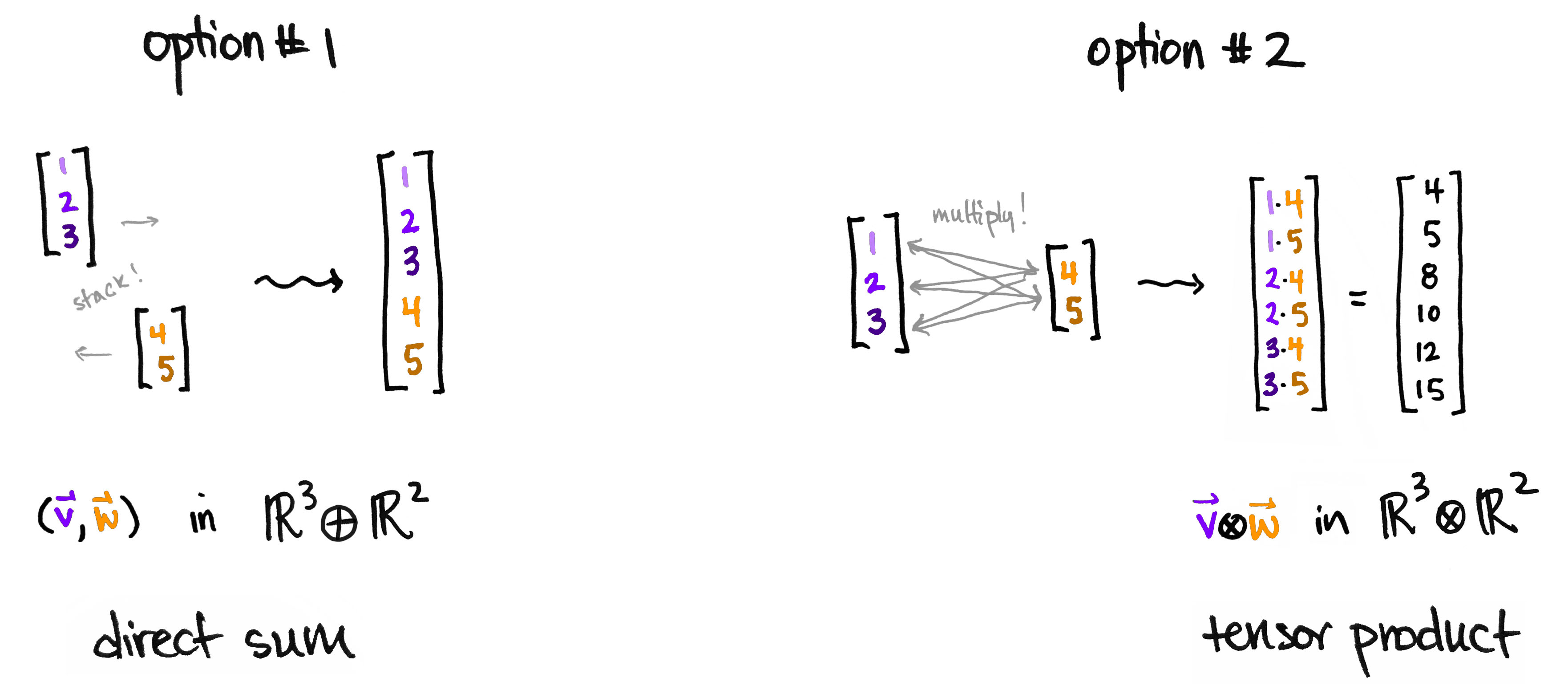

The first option gives a new list of $n + m$ numbers, while the second option gives a new list of $nm$ numbers. The first gives a way to build a new space where the dimensions add; the second gives a way to build a new space where the dimensions multiply. The first is a vector $(\mathbf{v}, \mathbf{w})$ in the direct sum $V \oplus W$ (this is the same as their direct product $V \times W$); the second is a vector $\mathbf{v} \otimes \mathbf{w}$ in the tensor product $V \otimes W$.

And that's it!
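To make the two options concrete, here is a minimal sketch in plain Python. The vectors `v` and `w` and the helper names `direct_sum` and `tensor_product` are illustrative choices made here, not anything fixed by the discussion above.

```python
# Example vectors; the specific entries are chosen only for illustration.
v = [1, 2, 3]  # a vector in R^3, so n = 3
w = [4, 5]     # a vector in R^2, so m = 2

def direct_sum(v, w):
    """Stack v on top of w: a list of n + m numbers."""
    return list(v) + list(w)

def tensor_product(v, w):
    """Multiply every entry of v by every entry of w: a list of n * m numbers."""
    return [a * b for a in v for b in w]

stacked = direct_sum(v, w)         # [1, 2, 3, 4, 5]
multiplied = tensor_product(v, w)  # [4, 5, 8, 10, 12, 15]

print(len(stacked), len(multiplied))  # 5 6
```

If you prefer NumPy, `np.concatenate([v, w])` and `np.kron(v, w)` compute the same two lists for one-dimensional arrays.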

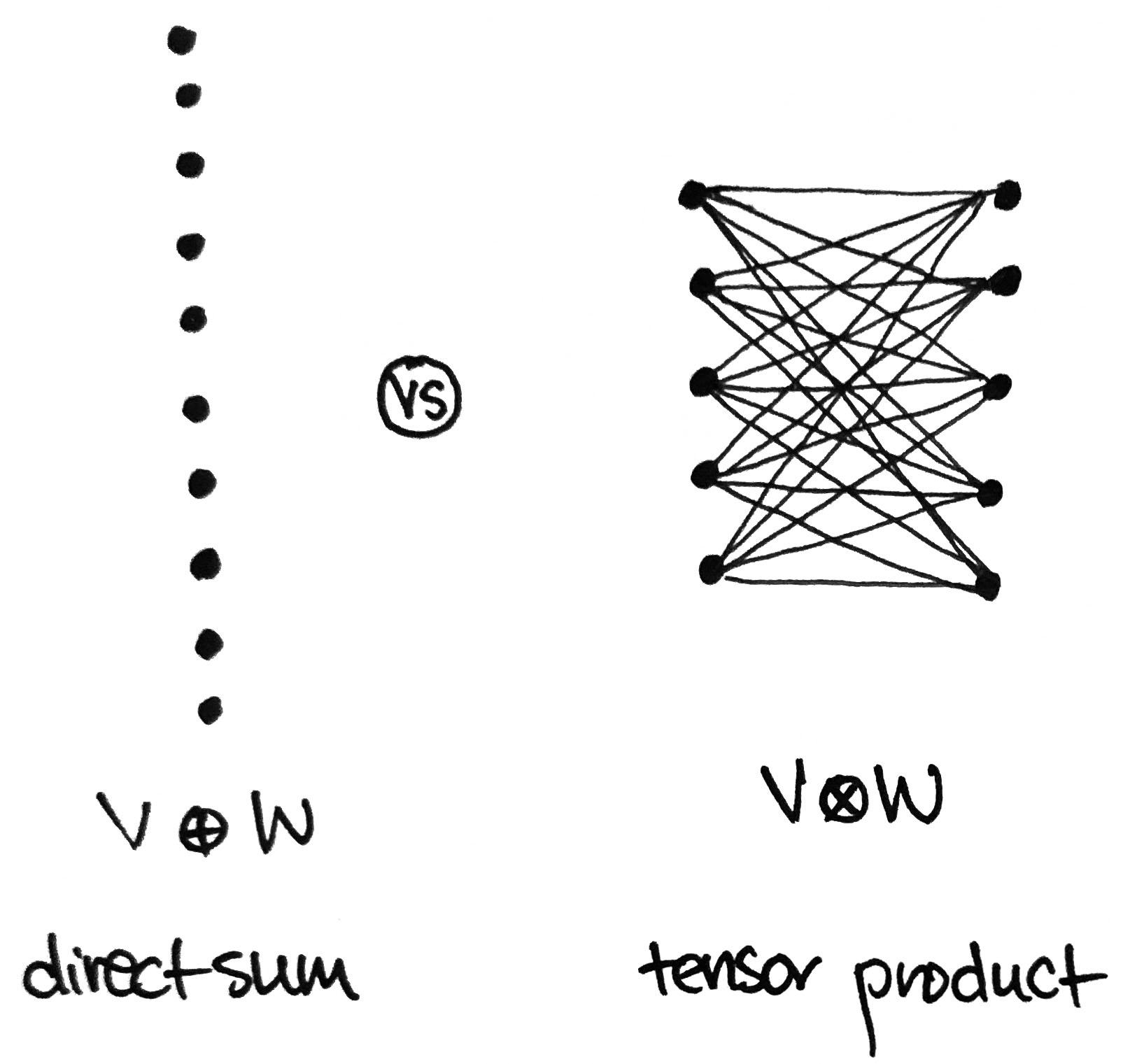

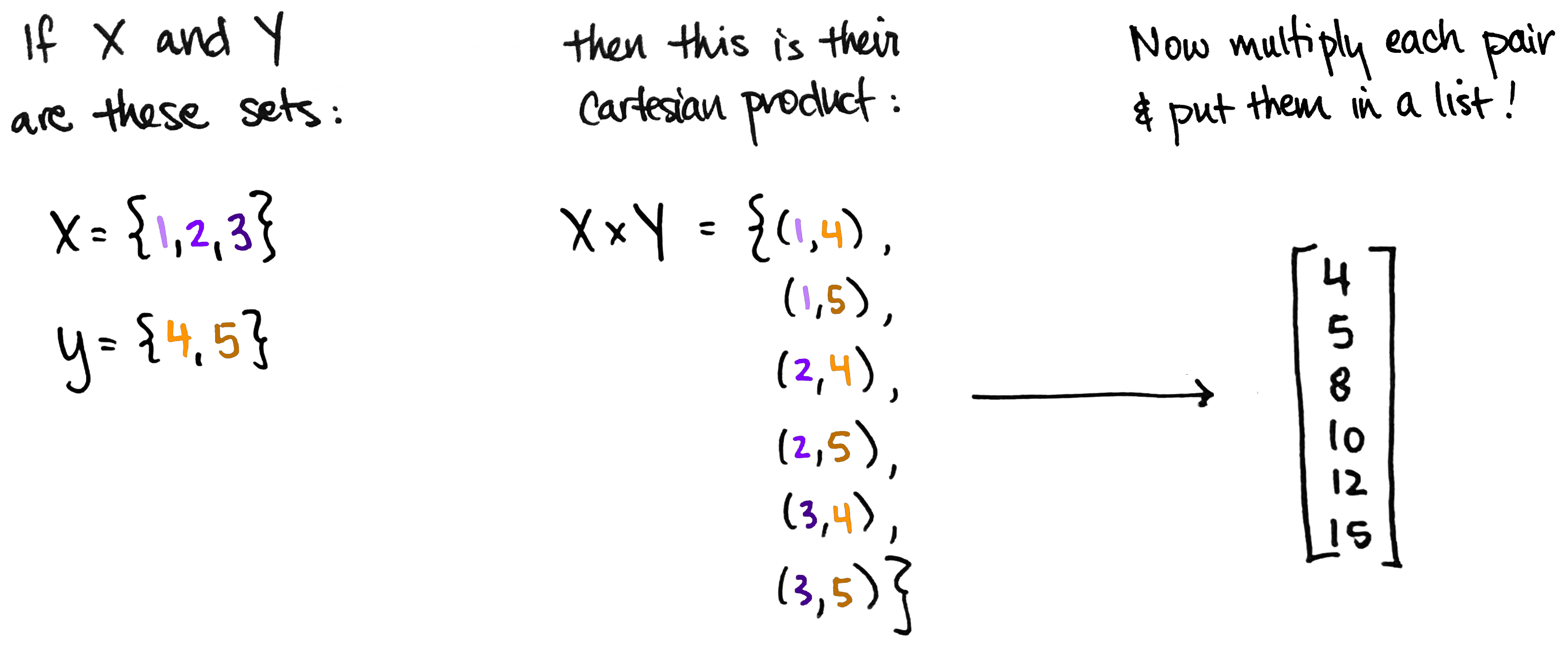

Forming the tensor product $\mathbf{v} \otimes \mathbf{w}$ of two vectors is a lot like forming the Cartesian product $X \times Y$ of two sets. In fact, that's exactly what we're doing if we think of $X$ as the set whose elements are the entries of $\mathbf{v}$ and similarly for $Y$.

So a tensor product is like a grown-up version of multiplication. It's what happens when you systematically multiply a bunch of numbers together, then organize the results into a list. It's multi-multiplication, if you will.

There's a little more to the story.

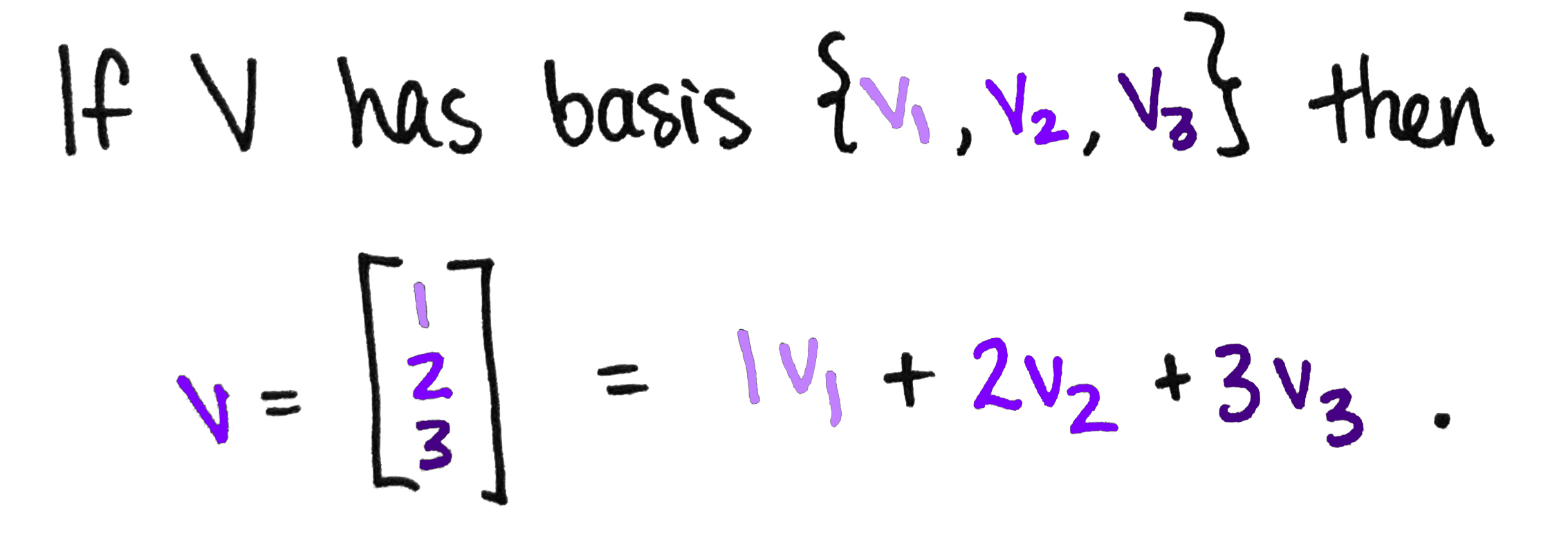

Does every vector in $V \otimes W$ look like $\mathbf{v} \otimes \mathbf{w}$ for some $\mathbf{v} \in V$ and $\mathbf{w} \in W$? Not quite. Remember, a vector in a vector space can be written as a weighted sum of basis vectors, which are like the space's building blocks. This is another instance of making new things from existing ones: we get a new vector by taking a weighted sum of some special vectors!
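One way to see the "not quite" in the smallest case: a list $(a, b, c, d)$ in $\mathbb{R}^2 \otimes \mathbb{R}^2$ has the form $\mathbf{v} \otimes \mathbf{w}$ exactly when $ad - bc = 0$, because the entries of $\mathbf{v} \otimes \mathbf{w}$ are $(v_1 w_1, v_1 w_2, v_2 w_1, v_2 w_2)$. The sketch below checks this for one product vector and for one sum of two product vectors. The helper names and the example entries are assumptions made for illustration.

```python
def tensor_product(v, w):
    """Multiply every entry of v by every entry of w."""
    return [a * b for a in v for b in w]

def is_product_vector_2x2(t):
    """Return True when (a, b, c, d) equals v ⊗ w for some v, w in R^2.

    This happens exactly when a*d - b*c == 0 (use exact integer entries).
    """
    a, b, c, d = t
    return a * d - b * c == 0

# A genuine product vector passes the test.
product = tensor_product([1, 2], [3, 4])  # [3, 4, 6, 8]

# A sum of two product vectors need not be a product vector itself.
e1, e2 = [1, 0], [0, 1]
summed = [x + y for x, y in zip(tensor_product(e1, e1),
                                tensor_product(e2, e2))]  # [1, 0, 0, 1]

print(is_product_vector_2x2(product))  # True
print(is_product_vector_2x2(summed))   # False
```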

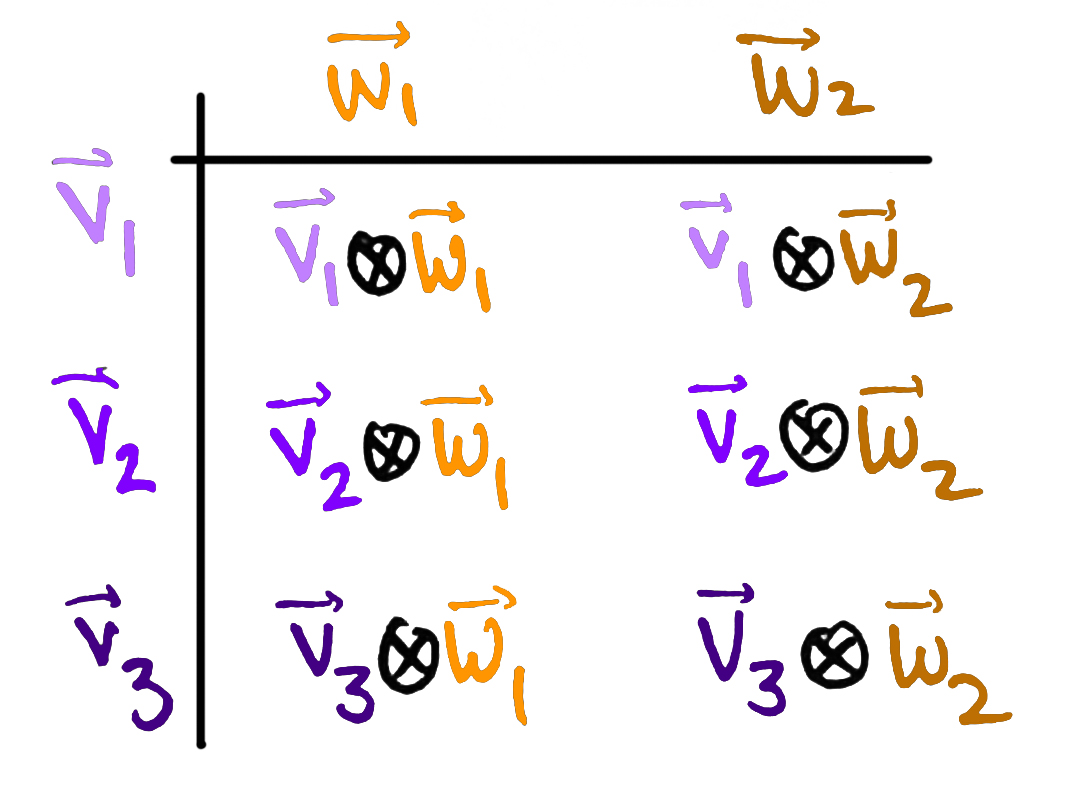

So a typical vector in $V \otimes W$ is a weighted sum of basis vectors. What are those basis vectors? Well, there must be exactly $nm$ of them, since the dimension of $V \otimes W$ is $nm$. Moreover, we'd expect them to be built up from the basis of $V$ and the basis of $W$. This brings us again to the "How can we construct new things from old things?" question. Asked explicitly: If we have $n$ basis vectors $\mathbf{v}_1, \ldots, \mathbf{v}_n$ for $V$ and $m$ basis vectors $\mathbf{w}_1, \ldots, \mathbf{w}_m$ for $W$, then how can we combine them to get a new set of $nm$ vectors?

This is totally analogous to the construction we saw above: given a list of $n$ things and a list of $m$ things, we can obtain a list of $nm$ things by multiplying them all together. So we'll do the same thing here! We'll simply multiply the $\mathbf{v}_i$ together with the $\mathbf{w}_j$ in all possible combinations, except "multiply $\mathbf{v}_i$ and $\mathbf{w}_j$" now means "take the tensor product of $\mathbf{v}_i$ and $\mathbf{w}_j$."

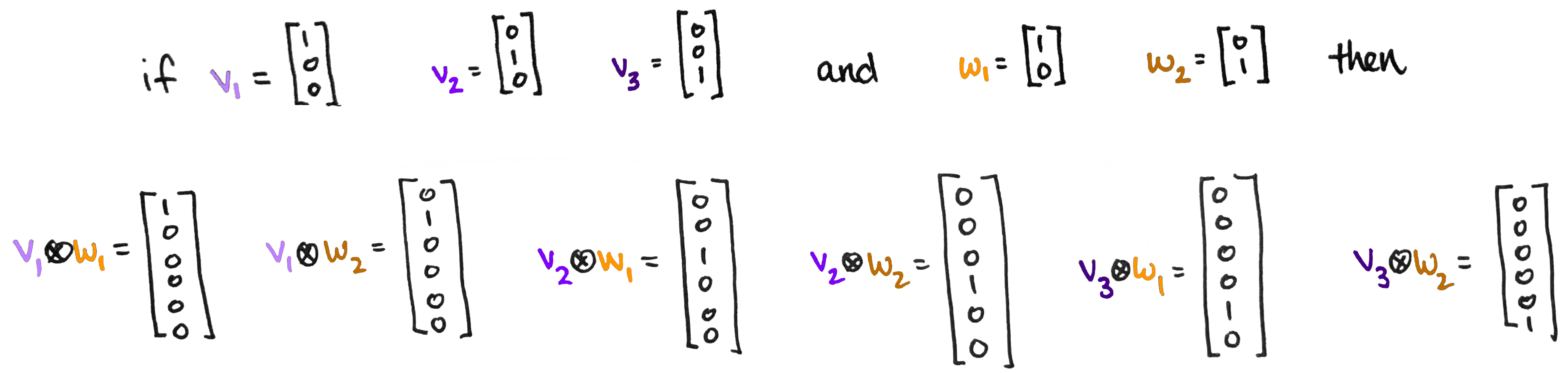

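Concretely, a basis for $V \otimes W$ is the set of all vectors of the form $\mathbf{v}_i \otimes \mathbf{w}_j$ where $i$ ranges from $1$ to $n$ and $j$ ranges from $1$ to $m$. As an example, suppose $n = 3$ and $m = 2$ as before. Then we can find the six basis vectors for $V \otimes W$ by forming a 'multiplication chart.' (The sophisticated way to say this is: "$V \otimes W$ is the free vector space on $A \times B$, where $A$ is a basis for $V$ and $B$ is a basis for $W$.")

So $V \otimes W$ is the six-dimensional space with basis

$$\{\mathbf{v}_1 \otimes \mathbf{w}_1,\ \mathbf{v}_1 \otimes \mathbf{w}_2,\ \mathbf{v}_2 \otimes \mathbf{w}_1,\ \mathbf{v}_2 \otimes \mathbf{w}_2,\ \mathbf{v}_3 \otimes \mathbf{w}_1,\ \mathbf{v}_3 \otimes \mathbf{w}_2\}.$$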

This might feel a little abstract with all the symbols littered everywhere. But don't forget: we know exactly what each $\mathbf{v}_i \otimes \mathbf{w}_j$ looks like. It's just a list of numbers! Which list of numbers? Well,

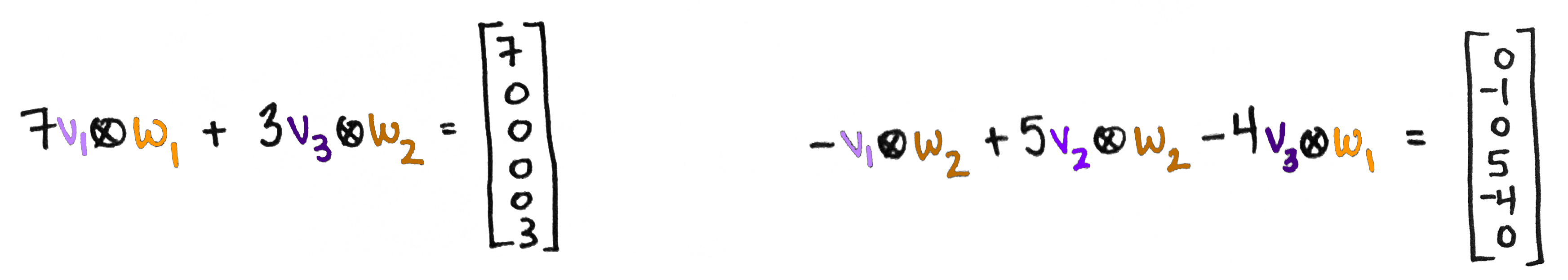
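The "multiplication chart" can also be sketched in a few lines of Python. This assumes the standard bases of $\mathbb{R}^3$ and $\mathbb{R}^2$ (a choice made here for concreteness), and the helper names are hypothetical.

```python
def tensor_product(v, w):
    """Multiply every entry of v by every entry of w."""
    return [a * b for a in v for b in w]

def standard_basis(dim):
    """Return the standard basis of R^dim as a list of lists."""
    return [[1 if k == i else 0 for k in range(dim)] for i in range(dim)]

V_basis = standard_basis(3)  # v_1, v_2, v_3
W_basis = standard_basis(2)  # w_1, w_2

# Tensor every v_i with every w_j, in the order v_1⊗w_1, v_1⊗w_2, v_2⊗w_1, ...
product_basis = [tensor_product(vi, wj) for vi in V_basis for wj in W_basis]

for vec in product_basis:
    print(vec)
# [1, 0, 0, 0, 0, 0]
# [0, 1, 0, 0, 0, 0]
# [0, 0, 1, 0, 0, 0]
# [0, 0, 0, 1, 0, 0]
# [0, 0, 0, 0, 1, 0]
# [0, 0, 0, 0, 0, 1]
```

With this choice of bases, the six products are precisely the standard basis of $\mathbb{R}^6$, which is one way to see that the dimensions multiply.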

So what is $V \otimes W$? It's the vector space whose vectors are linear combinations of the $\mathbf{v}_i \otimes \mathbf{w}_j$. For example, here are a couple of vectors in this space:
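As an illustration, with coefficients picked arbitrarily here and the case $n = 3$, $m = 2$ assumed, two such linear combinations are:

$$\mathbf{v}_1 \otimes \mathbf{w}_1 + \mathbf{v}_2 \otimes \mathbf{w}_2 \qquad \text{and} \qquad 2\,(\mathbf{v}_1 \otimes \mathbf{w}_2) - 7\,(\mathbf{v}_3 \otimes \mathbf{w}_1).$$

If the $\mathbf{v}_i$ and $\mathbf{w}_j$ are taken to be standard basis vectors, these are the lists $(1, 0, 0, 1, 0, 0)$ and $(0, 2, 0, 0, -7, 0)$.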

Well, technically...

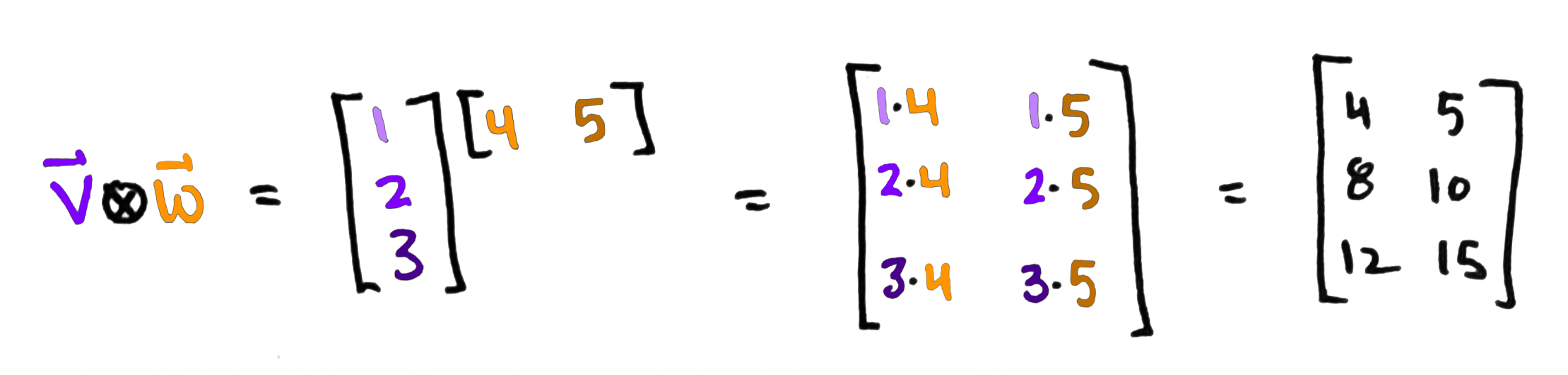

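Technically, $\mathbf{v} \otimes \mathbf{w}$ is called the outer product of $\mathbf{v}$ and $\mathbf{w}$ and is defined by $\mathbf{v} \otimes \mathbf{w} := \mathbf{v}\mathbf{w}^\top$, where $\mathbf{w}^\top$ is the same as $\mathbf{w}$ but written as a row vector. (And if the entries of $\mathbf{w}$ are complex numbers, then we also replace each entry by its complex conjugate.) So technically the tensor product of vectors is a matrix: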

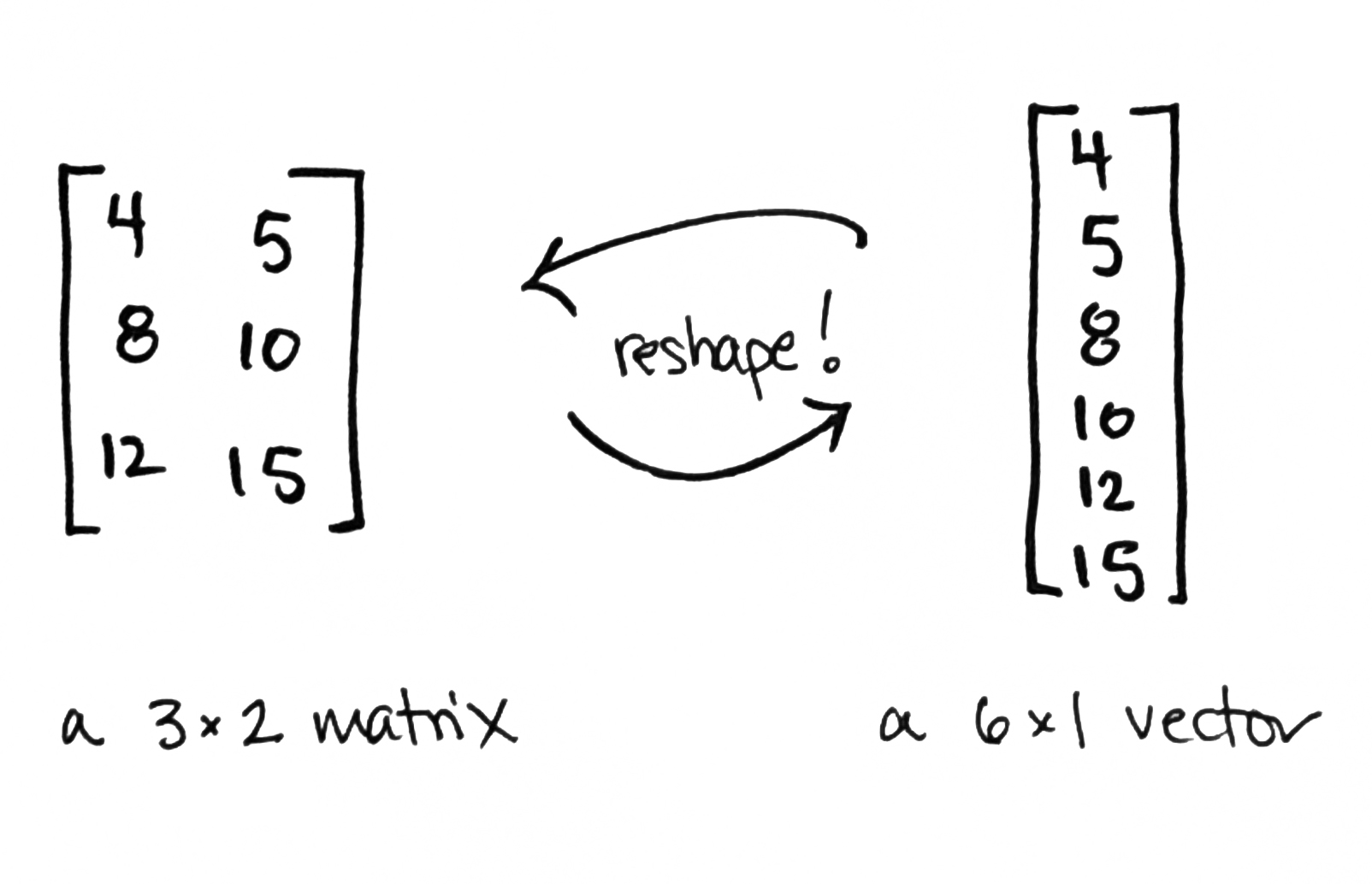

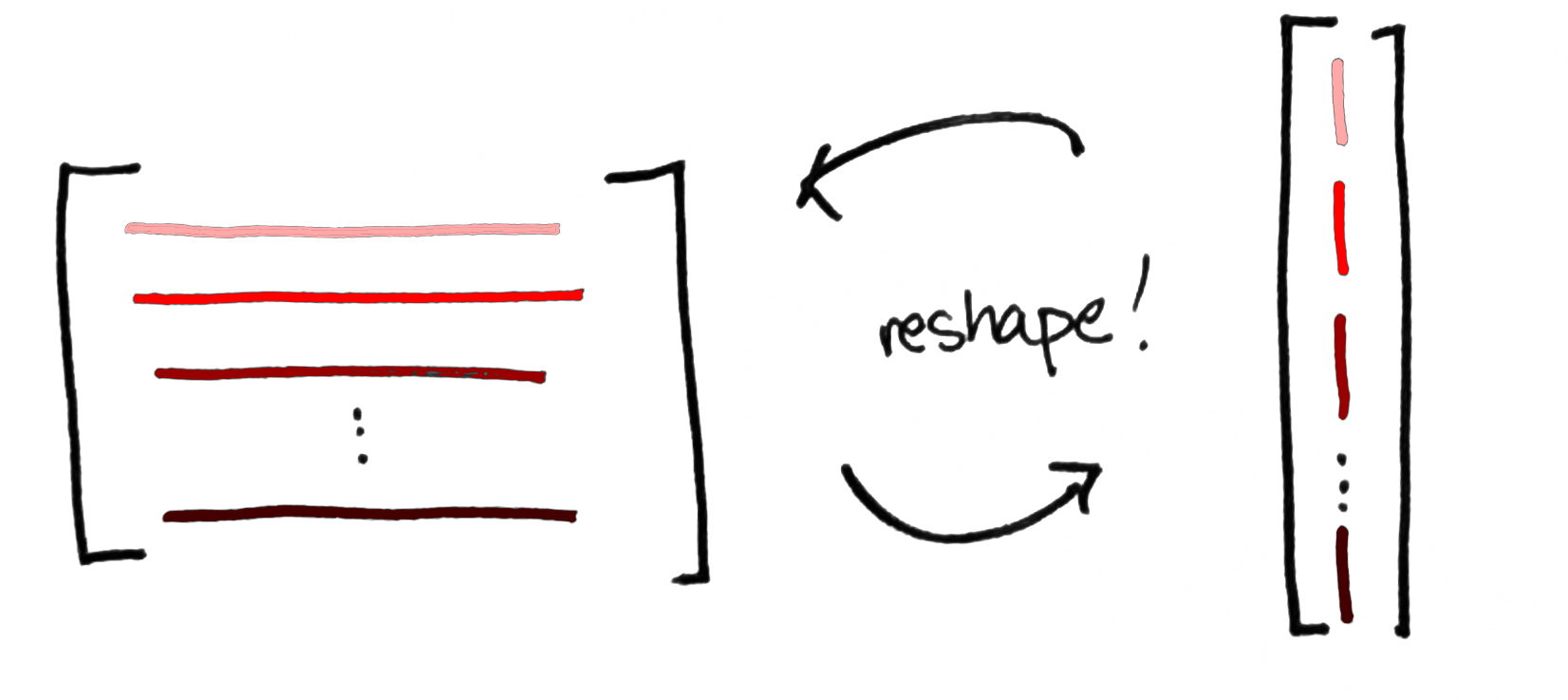

This may seem to be in conflict with what we did above, but it's not! The two go hand-in-hand. Any matrix can be reshaped into a column vector and vice versa. (So thus far, we've been exploiting the fact that $\mathbb{R}^3 \otimes \mathbb{R}^2$ is isomorphic to $\mathbb{R}^6$.) You might refer to this as matrix-vector duality.
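The reshaping can be sketched in plain Python as follows. The example vectors and the helper names `outer`, `flatten`, and `reshape` are illustrative assumptions, and the entries are real, so no complex conjugation is involved.

```python
def outer(v, w):
    """Outer product v w^T as a list of rows (real entries only)."""
    return [[a * b for b in w] for a in v]

def flatten(matrix):
    """Read a matrix row by row into one flat list."""
    return [x for row in matrix for x in row]

def reshape(flat, rows, cols):
    """Cut a flat list of rows * cols numbers back into a rows x cols matrix."""
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

v = [1, 2, 3]
w = [4, 5]

matrix = outer(v, w)         # [[4, 5], [8, 10], [12, 15]]
as_list = flatten(matrix)    # [4, 5, 8, 10, 12, 15]
back = reshape(as_list, 3, 2)

print(back == matrix)  # True
```

The flat list is the same one obtained by multiplying every entry of `v` by every entry of `w`, so the matrix view and the list view carry identical information. In NumPy, `np.outer(v, w)` together with `.reshape(-1)` and `.reshape(3, 2)` plays the same role.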

It's a little like a process-state duality. On the one hand, a matrix $\mathbf{v} \otimes \mathbf{w}$ is a process: it's a concrete representation of a (linear) transformation. On the other hand, $\mathbf{v} \otimes \mathbf{w}$ is, abstractly speaking, a vector. And a vector is the mathematical gadget that physicists use to describe the state of a quantum system. So matrices encode processes; vectors encode states. The upshot is that a vector in a tensor product $V \otimes W$ can be viewed in either way simply by reshaping the numbers as a list or as a rectangle.

By the way, this idea of viewing a matrix as a process can easily be generalized to higher dimensional arrays, too. These arrays are called tensors and whenever you do a bunch of these processes together, the resulting mega-process gives rise to a tensor network. But manipulating high-dimensional arrays of numbers can get very messy very quickly: there are lots of numbers that all have to be multiplied together. This is like multi-multi-multi-multi...plication. Fortunately, tensor networks come with lovely pictures that make these computations very simple. (It goes back to Roger Penrose's graphical calculus.) This is a conversation I'd like to have here, but it'll have to wait for another day!

In quantum physics

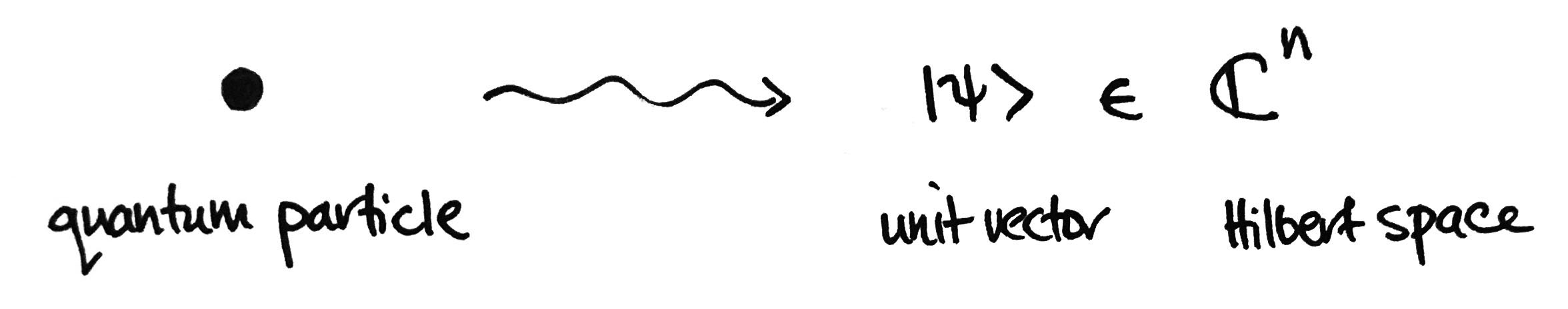

One application of tensor products is related to the brief statement I made above: "A vector is the mathematical gadget that physicists use to describe the state of a quantum system." To elaborate: if you have a little quantum particle, perhaps you’d like to know what it’s doing. Or what it’s capable of doing. Or the probability that it’ll be doing something. In essence, you're asking: What’s its status? What’s its state? The answer to this question, provided by a postulate of quantum mechanics, is given by a unit vector in a vector space. (Really, a Hilbert space, say $\mathbb{C}^n$.) That unit vector encodes information about that particle.

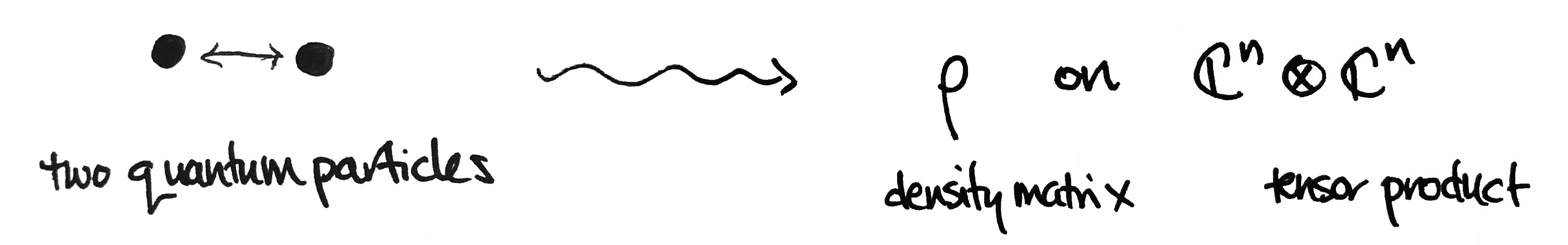

The dimension $n$ is, loosely speaking, the number of different things you could observe after making a measurement on the particle. But what if we have two little quantum particles? The state of that two-particle system can be described by something called a density matrix $\rho$ on the tensor product of their respective spaces $\mathbb{C}^n \otimes \mathbb{C}^n$. A density matrix is a generalization of a unit vector; it accounts for interactions between the two particles.

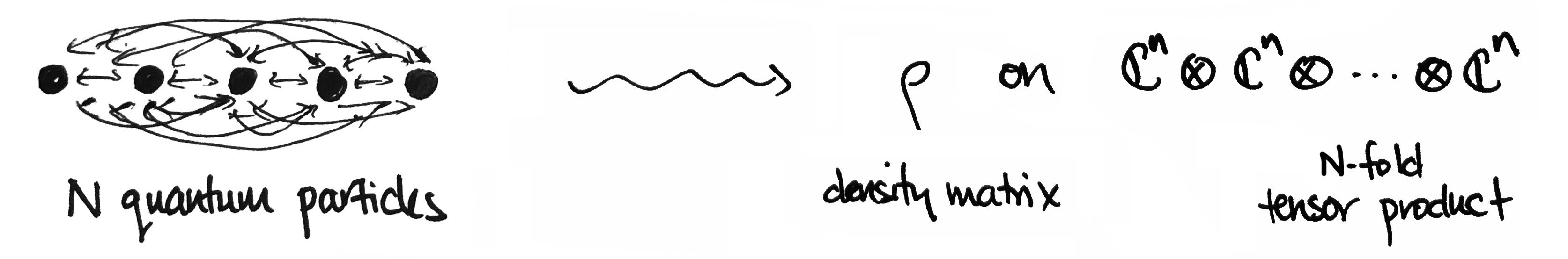

The same story holds for $N$ particles: the state of an $N$-particle system can be described by a density matrix on an $N$-fold tensor product.
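To get a feel for how quickly an $N$-fold tensor product grows, here is a rough sketch. It is a simplification under stated assumptions: it uses real entries in place of complex ones, it builds only a product of unit vectors rather than a density matrix, and the names `n_fold_tensor_product`, `factors`, and `state` are hypothetical.

```python
from functools import reduce

def tensor_product(v, w):
    """Multiply every entry of v by every entry of w."""
    return [a * b for a in v for b in w]

def n_fold_tensor_product(vectors):
    """Tensor a non-empty list of vectors together, left to right."""
    return reduce(tensor_product, vectors)

n, N = 2, 4             # each factor has dimension n; there are N factors
factors = [[1, 0]] * N  # N copies of one unit vector of length n

state = n_fold_tensor_product(factors)

print(len(state))                 # 16, which is n ** N
print(sum(x * x for x in state))  # 1, so the result is again a unit vector
```

The length of the list is $n^N$, so the bookkeeping grows exponentially with the number of factors.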

But why the tensor product? Why is it that this construction—out of all things—describes the interactions within a quantum system so well, so naturally? I don’t know the answer, but perhaps the appropriateness of tensor products shouldn't be too surprising. The tensor product itself captures all ways that basic things can "interact" with each other!

Of course, there's lots more to be said about tensor products. I've only shared a snippet of basic arithmetic. For a deeper look into the mathematics, I recommend reading through Jeremy Kun's wonderfully lucid How to Conquer Tensorphobia and Tensorphobia and the Outer Product. Enjoy!